Shadowhands

As the final project for my Embedded Systems in Robotics class at Northwestern University, my team used Shadow Hand robot hands attached to ABB seven-DOF robot arms to manipulate objects detected via computer vision.

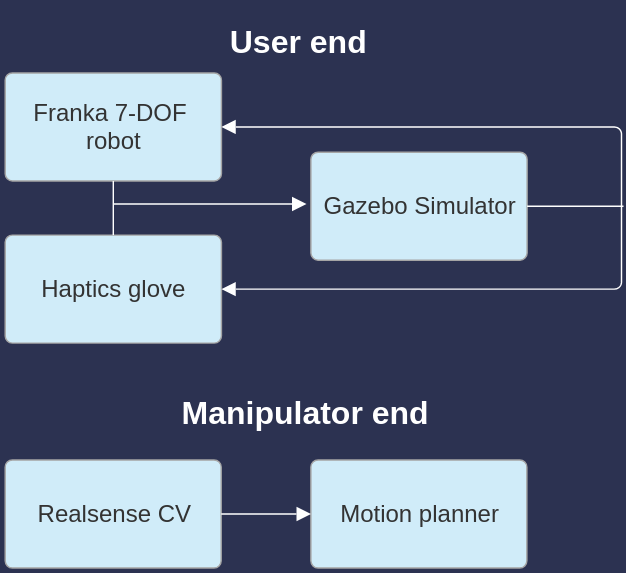

Alongside the ABB robots, we created a user-end virtual simulation of the environment in RVIZ and Gazebo, in which the user wears a haptic glove and has a Franka arm attached to their wrist.

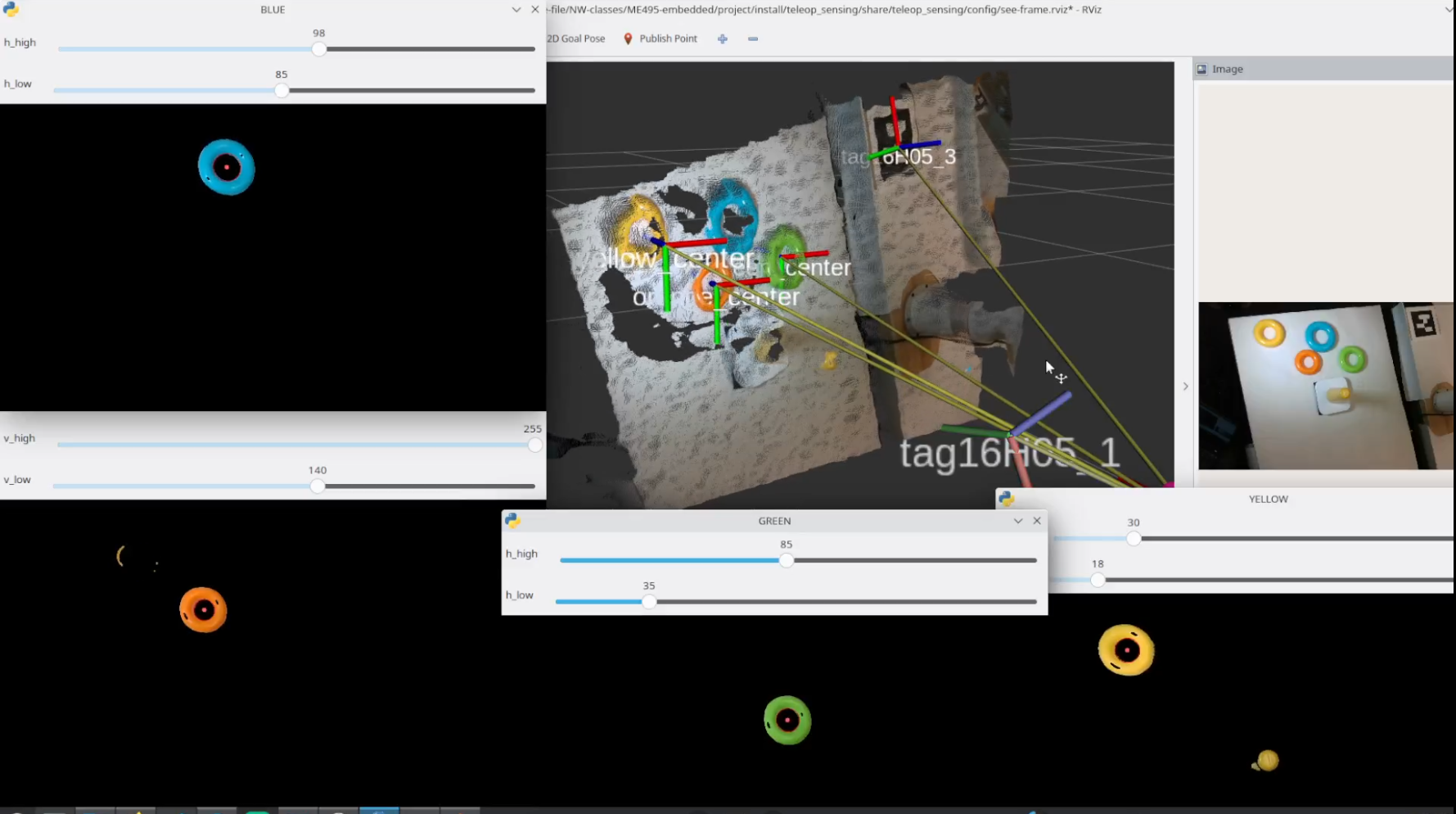

The transforms of each ring shown in RVIZ.


Manipulator End

The manipulator end has two parts: computer vision and robotic manipulation. The computer vision side detects the locations of the toy rings on the desk and sends those positions to the motion planner, which creates a series of actions for the robotic arm to execute to pick up the rings in order.

The computer vision on this end of the project uses color detection to determine which pixels belong to which ring. Additional CV methods, such as contouring, let the system determine the edges of each ring more accurately. The center of each ring is then computed as the average location of the pixels that match that ring's color.
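As a rough illustration of the centroid step, the sketch below averages the coordinates of all pixels whose color lies within a tolerance of a target color. It uses plain Python lists instead of a CV library, and the function name `ring_centroid`, the tolerance, and the tiny test image are all illustrative assumptions rather than the project's actual code.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel 0-255


def ring_centroid(
    image: List[List[Pixel]], target: Pixel, tol: int = 40
) -> Optional[Tuple[float, float]]:
    """Return the mean (row, col) of pixels within `tol` of `target` per channel.

    Hypothetical helper; returns None when no pixel matches.
    """
    row_sum, col_sum, count = 0, 0, 0
    for r, line in enumerate(image):
        for c, px in enumerate(line):
            # A pixel matches when every channel is close to the target color.
            if all(abs(a - b) <= tol for a, b in zip(px, target)):
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None
    return row_sum / count, col_sum / count


# A 4x4 image with a 2x2 reddish patch in the lower-right corner.
BLACK, RED = (0, 0, 0), (220, 30, 30)
image = [
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, BLACK, RED, RED],
    [BLACK, BLACK, RED, RED],
]
center = ring_centroid(image, target=(255, 0, 0))
```

A real pipeline would likely threshold in a color space that is less sensitive to lighting and use contours to reject stray pixels, but the averaging idea is the same.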


Once the ring locations are known relative to the camera, the known position and rotation of the camera relative to the robot base are used to compute the locations of the rings relative to the robot. These locations are then sent to the motion planner, which creates a series of actions that pick up each ring in order and stack them on the post in the center of the desk.
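The camera-to-base conversion can be sketched as a rigid transform, p_base = R · p_cam + t. The example below is a minimal version under assumed values: the rotation, the translation, and the helper names `rot_z` and `camera_to_base` are made up for illustration and are not the calibration used in the project.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def rot_z(theta: float) -> List[List[float]]:
    """3x3 rotation matrix for a rotation of `theta` radians about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def camera_to_base(
    p_cam: Vec3, rotation: Sequence[Sequence[float]], translation: Vec3
) -> Vec3:
    """Map a point from the camera frame to the base frame: R * p_cam + t."""
    x, y, z = (
        sum(rotation[i][j] * p_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


# Assumed camera pose in the base frame: rotated 90 degrees about z,
# 0.5 m along the base x axis and 1.0 m above the base.
R = rot_z(math.pi / 2)
t = (0.5, 0.0, 1.0)

# A ring seen 0.2 m along the camera x axis and 1.0 m below the camera.
ring_in_base = camera_to_base((0.2, 0.0, -1.0), R, t)
```

With these assumed numbers the ring ends up on the desk plane (z close to 0) at roughly x = 0.5 m, y = 0.2 m in the base frame.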

User End

The user end consisted of three parts: a haptic feedback glove that provides grip feedback to the user, a Franka seven-DOF arm attached to the user’s wrist to track its position in 3D space and provide weight feedback, and a virtual simulation in Gazebo.

Because this project was intended as a platform for delayed tele-operation, a locally run physics simulator was needed to give the user fast, accurate feedback. In an actual use case the user would be much farther from the manipulator end, so feedback coming from the manipulator itself would arrive with much more delay. Our team chose Gazebo because of its accuracy and its integration with ROS2.

Although Gazebo is a physics simulator, it does not let users set robot positions directly in the simulation. Our simulated hand instead had to take commands from a controller whose goal positions were the current real-world positions of the user’s hand and fingers.
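To show what "goal positions for a controller" means in practice, here is a minimal sketch of a proportional-derivative (PD) controller driving a one-dimensional point mass toward a goal. The PD form, the gains, and the name `pd_step` are assumptions chosen for illustration; this write-up does not specify which controller type the project used.

```python
def pd_step(position, velocity, goal, kp=50.0, kd=12.0, dt=0.01, mass=1.0):
    """Advance a PD-controlled 1-D point mass by one time step.

    Hypothetical example: gains and mass are arbitrary illustrative values.
    """
    # Pull toward the goal (proportional) and damp the motion (derivative).
    force = kp * (goal - position) - kd * velocity
    velocity += (force / mass) * dt
    position += velocity * dt  # semi-implicit Euler integration
    return position, velocity


position, velocity = 0.0, 0.0
goal = 0.3  # e.g. the latest measured finger joint angle in radians (made-up value)

for _ in range(500):  # 5 s of simulated time at dt = 0.01 s
    position, velocity = pd_step(position, velocity, goal)
```

In the real system the goal would change every time a new glove or wrist measurement arrives, so the simulated hand is always chasing the user's latest pose.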

Me using the haptic glove to demo the user feedback.

The Franka robot attached to my wrist continuously uses kinematic equations to determine the position and rotation of my wrist relative to the base of the robot. That data is then sent to the Gazebo controller and used as the desired positions.
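The idea behind those kinematic equations is forward kinematics: given the joint angles, accumulate each link's contribution to get the pose of the end of the arm. The sketch below does this for a simplified planar arm. A real seven-DOF arm works in 3D with full rotation matrices, so the function `planar_fk`, the link lengths, and the angles here are illustrative assumptions only.

```python
import math
from typing import Sequence, Tuple


def planar_fk(
    joint_angles: Sequence[float], link_lengths: Sequence[float]
) -> Tuple[float, float, float]:
    """Return (x, y, heading) of the end of a planar serial arm.

    Each joint angle is measured relative to the previous link.
    """
    x = y = heading = 0.0
    for angle, length in zip(joint_angles, link_lengths):
        heading += angle                  # orientation of this link in the base frame
        x += length * math.cos(heading)   # add this link's extent along x
        y += length * math.sin(heading)   # and along y
    return x, y, heading


# Two links (0.3 m and 0.2 m): first joint at +90 degrees, second at -90 degrees.
x, y, heading = planar_fk([math.pi / 2, -math.pi / 2], [0.3, 0.2])
```

Here the first link points straight up and the second points along x again, so the end of the arm sits at about (0.2, 0.3) with a heading of zero.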

The corresponding Gazebo simulation using my hand and finger motions as input.

Future Work

Improving controller tuning

While the physics simulator is very good at simulating freely moving objects such as the rings, the controller that moves the hand around the scene would need tuning before the project could be used in an actual scenario. Though tuning the controller was out of scope for a project of this size, ROS2 contains tools for tuning controllers, and Gazebo can model the hand more accurately so that the random flipping of the hand would no longer occur.

Saving user input motion for future playback

Future iterations of this project would need to store trajectories so that they could be run later on the manipulator end. For example, if the user makes a mistake in their execution, that motion data could simply be deleted and never sent to the manipulator end, whereas a directly controlled manipulator would be more prone to user errors.
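One possible shape for this feature is a recorder that buffers incoming motion and only keeps it once the user accepts it. The sketch below is a hypothetical design, not something the project implemented; the class names `Waypoint` and `TrajectoryRecorder` and the sample joint values are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple


@dataclass
class Waypoint:
    t: float                   # seconds since recording started
    joints: Tuple[float, ...]  # joint positions at time t


@dataclass
class TrajectoryRecorder:
    """Buffers user motion so a segment can be accepted or thrown away."""

    committed: List[Waypoint] = field(default_factory=list)  # safe to send later
    pending: List[Waypoint] = field(default_factory=list)    # not yet reviewed

    def record(self, t: float, joints: Sequence[float]) -> None:
        self.pending.append(Waypoint(t, tuple(joints)))

    def commit(self) -> None:
        """Accept the pending segment for later playback."""
        self.committed.extend(self.pending)
        self.pending.clear()

    def discard(self) -> None:
        """Drop the pending segment, e.g. after a user mistake."""
        self.pending.clear()


rec = TrajectoryRecorder()
rec.record(0.0, [0.0, 0.1])
rec.record(0.1, [0.0, 0.2])
rec.commit()                 # good motion: keep it

rec.record(0.2, [0.9, 0.9])  # a mistaken motion
rec.discard()                # never reaches the manipulator end
```

Only the waypoints in `rec.committed` would be forwarded to the manipulator end for playback.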